The Singularity Begins (with Pairing)

Knock knock. Who's there? Existential dread.

Intro

I should begin with an apology to our readers for the gap in content since October. It’s a confluence of factors, many good, some bad, some just the function of entering the holiday season with a lot of family activities that require all the thought, presence, and interaction they deserve.

So, with a belated “Happy New Year!” and all those pleasantries aside, the dust is finally settling, and I have a few heretical topics that have been brewing in the recesses of my mind, beginning with: “The Singularity.”

I’m warning you now, this article articulates a fast-approaching reality that some may find unsettling.

I also don’t want to soften the message by spending a few thousand words describing an existential scenario, then capping it with a few hundred words of “…but don’t worry!”

Navigating the future will require action - just as it always does - to get through things (hopefully unscathed, and ideally in better shape than you started).

-DH

The Singularity

For those new to the concept, “The Singularity” is the moment human cognition stops being the primary bottleneck in complex systems.

That is, once intelligence can be paired, multiplied, and iterated faster than humans can individually comprehend or collectively govern, progress detaches from human intuition.

For those who may have heard “Singularity” before and are slightly confused by my framing, people sometimes think it’s some kind of “melding” of human and machine intelligence into one. Others think it’s some “singular” invention (AGI/ASI) which fundamentally changes everything.

Both are understandable given the term “singularity” - but really it means that progress and improvement happen so incredibly fast, faster than we can keep up with, that to us it feels like things are happening “instantly”.

Dr. Ben Goertzel has a wonderful way of expanding on this topic:

I’m probably not on an island when I assert that everyone is feeling this same sense that time is accelerating with every passing year, even if you live outside of all this technology/AI bubble stuff.

I don’t believe this is just some generic function of getting older, or a reflection of how unstable local and geopolitics are at the moment. I really do believe that perception and action shape reality, and there are entities (individuals, corporations, governments, and now AI agents) who work tirelessly towards their individual aims.

In short, time isn’t just “feeling faster”, it’s causality getting denser.

For most of human history, reality was shaped by a small number of actors, making relatively slow decisions, over long feedback loops.

Today, reality is being shaped by millions of concurrent intention engines: humans, institutions, markets, algorithms, and now autonomous or semi-autonomous agents, each executing goals continuously, adaptively, and often asynchronously.

When enough of those loops overlap, the rate of meaningful state change spikes, even if the clock doesn’t.

Surfing

Before I dive into the “macro” aspects of the singularity, I want to present my own anecdote as someone who is hyper-sensitive to changing technology.

The singularity often gets summarized as this wave you see for a moment on the horizon, and then before you know it, it’s washing over and you find yourself either surfing along or being swept away in the undertow.

But if we pause the metaphor at the right moment, we know that for the surfers, there’s so much more nuance that begins way earlier in the process.

Steps like lining up the board, pre-paddling, and standing up at the optimal time set them up for success, and they even have exit strategies for riding the wave as long as they feel comfortable, dipping out to avoid any serious wave crash.

Well, I consider myself a proficient surfer, and in the last six months I’d say I’m now well past the point of paddling. I’m on the board, and getting the early feedback through my legs about just how monstrous of a wave this is about to be.

Slipping

When I started Digital Heresy three years ago, I had my brain wrapped around the space pretty tightly. I could fully comprehend and articulate transformer and attention mechanisms that were behind this exciting new leap in AI capability, and it was exciting to keep up with AI news.

There was enough room to reflect between research papers and model releases that each new concept could be absorbed, whether it was fine-tuning, quantizing, or supplementing AI knowledge through retrieval-augmented generation (RAG).

Then things got a little more slippery - more research papers were being published at elevated rates, and more frontier models were entering the arena.

You got to see many of those “First Chat” sessions chronicled here on Digital Heresy. On the back-end, I had developed a special AI crawler that organized AI-related research papers and summarized them just to help me narrow down the ones worth reading.

The density kept increasing. The meaningful state changes were too numerous to keep up with. Models were coming out more frequently, and in some cases in triplicate with all the “mini” and “nano” variants.

Nowadays, the landscape is changing so fast that I don’t even want to look at my already superhuman AI-assisted research backlog. I’m watching these “AI News” channels produce videos that span a solid hour and can cover 5-10 new platforms or products every. single. week.

Gravity Shift

I open with this quote from Shaw’s 1903 play ‘Man and Superman’ because of how it’s relevant here, but also precisely because of how often it is misapplied to disparage educators:

"Those who can, do; those who can't, teach"

- George Bernard Shaw

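Fun fact: the original wording, "He who can, does. He who cannot, teaches," comes from "Maxims for Revolutionists," an appendix to the play. In that context Shaw was referring to revolutionaries, not teachers, suggesting that those unable to actively participate in revolution (due to age or injury) could still contribute by teaching others.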

In reality, teaching is a distinct and demanding skill that requires deep subject knowledge and the ability to communicate complex ideas effectively to others.

On the other hand, “doing” or being an expert in a field does not automatically make someone a good teacher - in fact - experts often struggle to teach beginners due to the "curse of knowledge," where they assume familiarity with concepts they’ve mastered long ago.

The curse I feel is one of simultaneity - that I both teach and do - and the revolution we’re collectively participating in is a technological one where I am trying to learn, convey, and practice - all at once.

This is why you’ve seen more creative bleed-through in Digital Heresy. Sure, I love evaluating AI models, pressing them about ethical and philosophical topics, but I also love wielding this incredible technology to unlock a million other creative endeavors I’ve had on the backburner for quite some time. The two Digital Heretic album releases are the most overt examples of this.

This shift - from just talking about new AI concepts, and how clever chat bots have become in the realms of ideation, self reflection, philosophy, reasoning, and strategizing - to now becoming actionable intelligence with agency, is the “gravity pull” that I (and many others) have been feeling as of late.

This is what I mean by “I’m on the board starting to surf”, and why I feel we’ve really turned the corner on the “novel AI” bubble into something truly useful.

And this, dear reader, is the final confounding factor for why my time spent researching and reporting for Digital Heresy has tapered.

It’s not burnout, or having some lack of things to say - it’s all the other doing. It’s whatever free time I have left between family and work being invested ever more into writing with reality instead of about it.

And if this is happening to me, then it’s for sure happening to lots and lots of other people, contributing to that accelerating convergence we reviewed earlier in the Singularity section.

Inverse KB Law

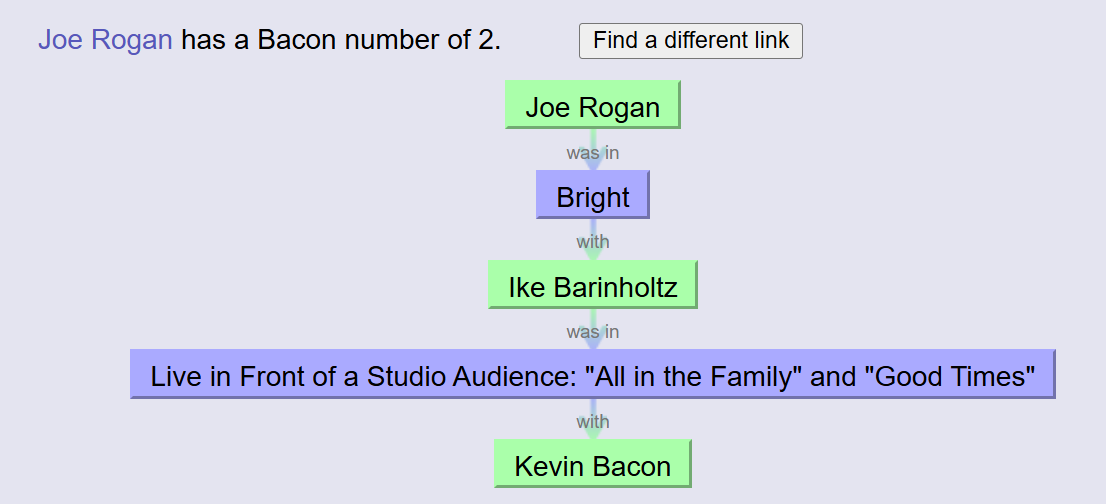

Bacon’s Law, aka Six Degrees of Kevin Bacon, or Six Degrees of Separation, is based on the idea that everyone on the planet, all 8.3 billion of us, can be connected through a chain of no more than six or so individuals who’ve met or worked together in real life.

The Kevin Bacon version simply swaps in a famous actor as the hub: in theory, everyone in Hollywood is just a few hops away from having acted with him in something.

It’s a fun game that shows just how small social networks can turn out to be. But here’s the thing - the key to the game is that it’s based on the presumption that any two people in the chain spent at least SOME meaningful amount of time together in the same place.

For me, I’m not an actor, but my chain to Kevin is just three people long. A long time ago, I got to meet and chat with Joe Rogan when he came to a local comedy club in Austin. According to “The Oracle of Bacon”, Joe is only twice removed from Kevin:

Note: I’m not TOO far off from claiming Joe Rogan. I wasn’t just vaguely aware of him or his podcast, or just sat in the audience at one of his shows. I met him in the lobby. We shook hands, we talked about his old BBS forums, we took a photo together.

Anyhow, what I’m proposing here is that technology is an inverse to Bacon’s law by increasing the air-gap between individual human beings.

Let’s expand on that.

First, AI doesn’t get full credit. The internet, with its social media, virtual spaces and groups, began creating these gaps decades ago. As a result, the first inevitable casualty was casual friendships.

Used to be, if you didn’t want to be bored, you had to go somewhere to meet people - the bar, the park, work (to some degree) or some hobby.

Now, all someone has to do is join a Facebook Group or a Discord Server and engage with a stream of entities bustling about liking and sharing their experiences, but who have never met in person.

Okay cool, so people have fewer “real” friends, meaning we’re down to two in-person circles left to harvest connections from: Home and Work.

Home is the final frontier, but work is about to become the next casualty.

Some people (mostly employers looking to justify their property leases and tax incentives) will blame this death on “work from home” as they attempt to muster (or marshal) their employees to return to office, under the pretense that it will promote that human-to-human interaction and collaboration that’s oh-so precious.

Those same organizations are actively investing millions and billions in ways to reduce their number one operating expense: humans.

We aren’t even at AI yet; this is just the basic automation and process improvement that’s been handing out Six Sigma belts to employees for decades.

“Okay, so it’s greedy corporations and this dastardly social media thing that’s dragging us towards isolation?”

Nope, we don’t get off that easy. Most of us are practically begging for it, in one way or another.

Here’s an easy chain:

Do you leverage ordering apps to deliver literally anything to your home?

If so - How much human face time is reduced by ordering rather than going into the restaurant or walking through the grocery store, or hunting through a department store, actively shopping?

And with that interaction potential reduced to just one single driver - how many people still click the “contactless delivery” option or stonewall the guy ringing the doorbell until they leave the items on the porch and drive away?

Yeah. That’s what I mean.

That thin thread - that last bastion of “Hi how are you, thank you so much for delivering [x], have a great day!” is about to be abstracted further through robots and delivery drones.

And some of us won’t even be the ones to bring the delivery in anyways; our affordable robot butlers will instead, saving us the potential “howdy neighbor!” we otherwise might encounter standing on our front porch.

That’s the Inverse KB Law. Fast forward past the robots, the drones, the Rufus shopping assistants, the “Ok Google” and “Hey Alexa” action skills, and you’ll find a network effect that might require six hops just to get to the next real person, let alone Joe Rogan or Kevin Bacon.
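For the graph-minded, here is a minimal sketch of what "hops to the next real person" could look like. The contact graph below is entirely made up for illustration (the node names, the human/AI tags, and the `hops_to_nearest_human` helper are all my own assumptions), and it uses a plain breadth-first search, the same idea behind counting degrees of separation:

```python
from collections import deque

# Hypothetical contact graph: every node is tagged as a human or an AI/automation layer.
KIND = {
    "me": "human",
    "assistant": "ai",
    "shopping_bot": "ai",
    "delivery_drone": "ai",
    "store_clerk": "human",
    "neighbor": "human",
}

# Hypothetical edges: who (or what) each node interacts with directly.
EDGES = {
    "me": ["assistant"],
    "assistant": ["me", "shopping_bot"],
    "shopping_bot": ["assistant", "delivery_drone"],
    "delivery_drone": ["shopping_bot", "store_clerk"],
    "store_clerk": ["delivery_drone", "neighbor"],
    "neighbor": ["store_clerk"],
}


def hops_to_nearest_human(start, edges, kind):
    """Breadth-first search: hops from `start` to the closest *other* human."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if node != start and kind[node] == "human":
            return dist
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None  # no other human is reachable at all


print(hops_to_nearest_human("me", EDGES, KIND))  # → 4
```

In this toy graph the nearest real person sits four hops away, behind three layers of middleware. Remove the AI nodes and connect "me" straight to "neighbor", and the same function returns 1.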

With every interaction we willingly outsource or abstract through some AI middleware, the further away we get from each other.

At the limit, at the furthest stretch of science fiction, a person’s direct network is reduced to two - themselves, and a primary AI which acts as a trusted interface to the world around them.

And if we want to get really weird with it, this pairing might end up existing inside the same shared skull for some people (à la Neuralink).

Paired Programming

Rewinding a bit, a few key things have transpired over the past two months that really rose above the noise to catch my undivided attention.

The first was OpenAI’s Codex and their Agentic capabilities, which allow ChatGPT to work on its own, and even build and test code within its own sandbox, without you even having to maintain an ongoing chat session or paste things into a code editor somewhere.

I gave it access to a safe code repository, and immediately saw value as it performed in-depth code reviews and provided valuable feedback directly on the pull requests.

It did this on its own, at my request, and notified me when it completed its task. This was the first big indicator for me.

This evolved further with the Agentic announcement, which hit my inbox while I was at a vendor conference. I was in a session with a prominent staffing company that was announcing its new “build your own Agent” capabilities.

In the break-out session, they had a demo server that allowed users to register, log in, and then create forms and flows, attach agents, and orchestrate actions.

The goal was to make a quick AI survey agent that asks questions, captures responses, and emails the results. I ended up in the peanut gallery as folks scrambled for an available demo laptop for the exercise.

While everyone else worked through the handout packets, I thumbed a simple instruction to the new GPT Agent:

Register me at this URL using this email address and let me know when you’re done.

A few seconds later, I had a log in.

My next prompt:

Use that login and orient yourself to the tools and options available to you once you’re in. It’s a visual form building app. Your goal is to create a simple conversational AI flow that

1) Gathers basic information about the user (email, address, industry, profession)

2) Presents the user with a series of 5 survey questions about whatever topic you want.

3) recaps the users answers and allows them to correct mistakes or change their answers and

4) confirms their final responses and calls the Email tool to send them along. Use my email address as the “send to” for this flow.

I closed my phone up and watched everyone else work through their packet of instructions.

About five minutes later, I got a ChatGPT notification that it had finished creating the survey flow. It was 99% functional.

The only issue was a UI element that required hovering the mouse to reveal a pop-up before a selection could be made, which the agent struggled to notice. Once I filled in that last gap, I had a working flow while most of the people in the demo were still dragging and dropping components into their workspace and creating the various flow associations.

I sat there for the remaining 10-15 minutes of this 20 min exercise just kind of.. blankly staring ahead at the people still working through the exercise, and trying to imagine a future where any of this still occurred.

That very real moment, when a semi-autonomous agent was able to roll into a completely novel environment, one for which no pre-training was possible, and simply ‘figure out’ how to create an app nearly perfectly on a single set of instructions and in just a few minutes, was a moment that will forever weigh on me.

The Prodigal Son Returns

Fast forward to the beginning of 2026. I know I’ve had my differences with Claude in the past, but there were all these reports coming out about how Claude Opus / Claude Code / Claude CLI was quickly becoming THE dominant Enterprise coding AI, and this adoption was responsible for their explosive rise to a billion dollar run-rate.

So, I gave it a shot, installing Claude CLI, which allows it to run directly on my personal engineering computer, where I interact with it via the “CLI” or “Command Line Interface”. For those not familiar, this is the “DOS window” you see if you run “cmd” from your Windows desktop.

Just as with OpenAI, I gave it narrow access to just one project concept I wanted to test it with. But here’s the difference: While Agentic ChatGPT was running “off in the cloud somewhere” on a virtualized sandbox, Claude CLI was running right there on my computer, as if I literally handed it the keyboard and mouse.

Each time it needed to run a potentially unsafe command, it prompted me for permission - where I could choose whether to block the action, allow the action once, or always allow the action within the working folder.
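To make that three-way choice concrete, here is a toy sketch of a permission gate. To be clear, this is not how Claude's CLI is implemented; the `PermissionGate` class, its `ask` callback, and the demo folder path are all hypothetical names I made up to illustrate the block / allow once / always allow (within the working folder) pattern:

```python
from pathlib import Path


class PermissionGate:
    """Toy model of a block / allow-once / always-allow-in-folder prompt."""

    def __init__(self, working_dir, ask):
        self.working_dir = Path(working_dir).resolve()
        self.ask = ask  # hypothetical callback: command -> "block" | "once" | "always"
        self.always_allowed = set()

    def _inside_working_dir(self, cwd):
        cwd = Path(cwd).resolve()
        return cwd == self.working_dir or self.working_dir in cwd.parents

    def permits(self, command, cwd):
        inside = self._inside_working_dir(cwd)
        # A remembered "always" only applies inside the working folder.
        if inside and command in self.always_allowed:
            return True
        answer = self.ask(command)
        if answer not in ("once", "always"):
            return False  # "block" (or anything unexpected) denies the action
        if answer == "always" and inside:
            self.always_allowed.add(command)
        return True


# Demo with scripted answers standing in for the human at the keyboard.
scripted = iter(["once", "always"])
gate = PermissionGate("/srv/demo-project", ask=lambda cmd: next(scripted))
print(gate.permits("npm test", "/srv/demo-project"))      # human is asked: "once"
print(gate.permits("npm test", "/srv/demo-project"))      # human is asked: "always"
print(gate.permits("npm test", "/srv/demo-project/api"))  # remembered, nobody is asked
```

The design point is the scoping: "always" is remembered per command and only honored inside the working folder, so the same command run anywhere else triggers a fresh prompt.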

What transpired over the following 2-3 week period was nothing short of game changing. We stood up an entire functioning application.

Like, the entire thing.

Secure user registrations, email verification, password reset, user content management, an entire data layer, an entire Cloudflare R2 object storage layer, nightly backups, CI/CD pipeline deployments with smoke tests and validation checks, a complete data schema deployment process with implementation and backout steps, admin features for managing the app itself as well as users, anti-bot protection, security hardening, GDPR “right to be forgotten” features, custom logos and branding, server and database health checks, diagnostic scripts. Everything.

And the three primary skills I brought to the table… er… command line… were:

1) A systems-thinking, architecture, and product owner mindset.

2) The ability to clearly and concisely articulate requirements.

3) The patience and focus to spend a few hours a night working with Claude as a paired programmer.

The surreal moment was when I forgot I still had OpenAI Codex wired into my same repository, and for one of the deployment bundles (referred to as PRs or Pull Requests) - Codex agent performed an automatic code review on the changes and pointed out a few potential security risks to plug.

Like, it literally leaves comments on the discussion thread and cites the code where the issues exist.

Seeing this, and just as casually as I would have mentioned the feedback to a real world colleague, I told Claude:

“Hey, it looks like Codex left some feedback on the PR, check it out and see if it’s something we need to address?”

Claude then agentically read through the comments, agreed that the issues raised were “great finds” and then proceeded to patch the issues and attach the updated code to the PR and even respond to the comments in the discussion.

The fixes received a “thumbs up” emoji from Codex.

I cannot overstate how incredibly powerful this is, and this kind of technology is in the hands of tens of thousands of people out there that are more capable, more articulate, and more importantly, have more time than I do.

But going back to our Inverse KB Law - just look at where I find myself: The only human being on a “team” of three, designing and deploying an entire application from ideation to beta testing in just two to three weeks.

The back-end data design and API access layer alone would have been a 1-2 week estimate for a team of developers where I work.

I would then be impressed if they could get it to the current level of polish and completion in 4-6 months.

This is why I say the singularity won’t be forced down by governments or corporations. This is why I say we’ll practically beg for it, because I cannot in all honesty sit here and say I would trade this level of capability, focus, and conscientious development for the hope that I could convince a team of real people with the right combination of skills to slog through developing my idea over a 10x longer period of time with the same level of quality.

But just when I was about to jam a flag into the dirt and declare “we have arrived”, the simulation said “Hold my beer” and hit me with one more advancement that I only just started hearing about over the past few days.

Clawdbot enters the chat.

Head to YouTube and do a casual search for just one word: “Clawdbot”

Here’s the kind of results you’ll see:

Why People Are Freaking Out About Clawdbot

ClawdBot is out of control

I Played with Clawdbot all Weekend - it’s insane.

Clawdbot Changes Everything

Clawdbot is the most powerful AI tool I’ve ever used in my life.

Clawdbot is everything I was hoping A.I. would be

I just replaced myself with Clawdbot

Clawdbot is an inflection point in AI History:

(If you end up watching the featured video above, pay close attention to the very ironic sponsor advertisement at ~ the 20 minute mark.)

Remember earlier, when I said I had to bring three primary skills to the table when I began working with Claude CLI as a paired programmer?

Well, Clawdbot solves #3 from that list - the need to sit down and interactively code/interact together on the system to get things done.

You install Clawdbot on a system and, like Claude, it has access to perform any and all actions you can from that same PC. This includes opening and editing files, connecting to websites, connecting to code repositories, etc.

But the key is, Clawdbot can also be wired into messaging apps, like Telegram, WhatsApp, or Slack, which it uses as its communication gateway to you from anywhere you want.

So, rather than me having to “check in on” my coding buddy to see if he’s done working and waiting for next steps, Clawdbot will just… text me to let me know things are done, or to ask me to resolve a design issue, or whatever else it needs.

The second thing Clawdbot does is… basically… run 24/7. It maintains its own internal state and from what I can tell, even has some kind of self-orchestration capabilities, as I’ve seen people tell it to give them status updates or review their emails with them every morning.

To contrast: On average, I can have a brief conversation about next steps with Claude and it will go off and churn out a bunch of work over a roughly 5-10 minute stretch, which in many cases would be like 1-2 hours of work if I was coding things myself, even with a “copilot” type setup inside of Cursor or VS Code.

Depending on how complex our sessions are, I also have to let Claude go through what’s called a “Compaction” - where it summarizes everything we’ve done in that session so far and basically “sleeps” so it can recover into a new context window.
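If you're curious what compaction amounts to conceptually, here is a minimal sketch. It is my own simplification, not Claude's actual mechanism: the word-count "tokenizer", the placeholder `summarize` function, and the `budget` and `keep_recent` parameters are all assumptions made for illustration.

```python
def estimate_tokens(text):
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def summarize(messages):
    """Placeholder summarizer; a real agent would ask the model to write this."""
    return f"[summary of {len(messages)} earlier messages]"


def compact(history, budget, keep_recent=2):
    """Fold older messages into a single summary once the history exceeds the budget."""
    total = sum(estimate_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # still fits, or nothing old enough to fold away
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [summarize(older)] + recent


history = [
    "design the schema for users and sessions",
    "add email verification to registration",
    "write the nightly backup job",
    "fix the failing smoke test",
]
print(compact(history, budget=15))
```

The trade-off is the obvious one: the session gets room to keep going, but whatever detail the summary drops is gone from the working context.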

Clawdbot appears to just be… always on, always ready to work.

You might be asking: Which AI is under the hood?

Well, you can pick which one you want, but many people are electing to use… you guessed it… Claude Opus (the same AI I’m using for Claude CLI)

Let this sink in given everything we’ve talked about so far.

Final Thoughts

Like I said, I wasn’t going to pull any punches.

I lied a bit, though, about the lack of a silver lining. Every piece of technology we use has a dual purpose potential, and this is no different.

It’s worth saying that by essentially eliminating the barrier to software development, we the people (consumers) finally have a chance at fighting back against the constant enshittification that SaaS companies have been bending us over with as they give us feature-rich software, then slowly take features away or paywall them behind ever-increasing subscription fees.

And while I’m sure many will use this to create apps and programs that replace jobs, increase individual isolation, or eke out subscription revenue - there are potentially many who have aspired to create things that bring us together and give us the free time to unplug and spend more time with the real people in our lives.

The ability to make your creative ideas a reality has never been easier.

If this is you - then go build.
