🍓🌱⛓️💥

A Heretic's Journey through the Strawberry Patch

3:00 am

Our story begins early Friday morning on August 9th. I’m in bed watching TV with the missus when my phone starts dinging with updates. That’s not totally unheard of, as some of my friend circles keep odd hours and, especially going into a Friday, are prone to sudden fits of conversation on Discord or Messenger.

But this wasn’t that - and it wasn’t a sudden family emergency - it was… Twitter?

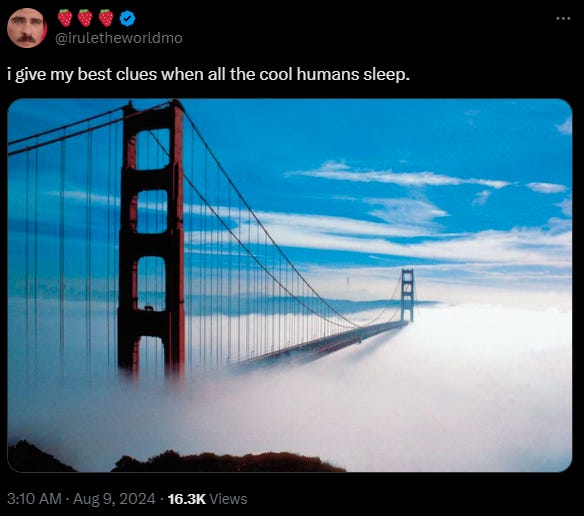

Enter 🍓🍓🍓 aka @iruletheworldmo aka “Mo” aka “Strawberry”:

This account popped up in August and started generating a lot of buzz in some of the AI channels I follow, like Matthew Berman’s.

Note: All this Strawberry stuff is confusing. If you want to jump down the rabbit hole, watch the video, but it’s not required viewing:

For our story here, all you need are a few basic terms:

Q* (Q-Star) - A secret project codename that OpenAI and others have been hinting at as some kind of secret-sauce algorithm going into the next-gen model. Summaries like this post have pieced together that Q* represents a fusion of reinforcement learning and heuristic search to advance AI’s problem-solving capabilities, particularly in complex, logical tasks. It embodies a move towards more human-like reasoning and self-learning abilities, with potential implications for improving the reliability and generalization of AI systems.

Strawberry - Now that they’re done training the model and getting close to release, the hype train has ramped up, culminating in this new association between Q* and “Strawberry”, which seems to be the street name for the LLM.

🍓🍓🍓 iruletheworldmo - Finally, we have this Twitter account @iruletheworldmo, which comes out of nowhere fully tricked out in Strawberry emojis and cryptic tweets hinting at AGI and other stuff.

With me so far? Cool.

Look, I know. It’s weird.

I warned you it was going to get a lot weirder than this in the About video a year ago.

Back to the late-night spam.

So when I first heard about Strawberry and folks pointed out this account, I had The Speaker follow it and turn on notifications.

All notifications.

This turned out to be a mistake, as this account was THE one single-handedly turning my phone into a telegraph key (one of those “Morse code thingies,” for you youngins).

The thing was, this was blasting tweets and replies. Like, an inhuman level of pings going out back-to-back.

To me, this meant one of four things:

This thing was running one of the next-gen AIs

Someone paid for a social media bot farm overseas to launch a massive multi-login marketing campaign

Sam Altman just hit a fat rail of coke, flipped on his secret Neuralink, and decided to go on a 3am telepathic tweetspree

Some other person with some level of insider knowledge just hit a fat rail of coke and is about to engage a hype-train rollercoaster that ends nowhere, or worse, some NFT-backed, deep-fake product made to seem sponsored by OpenAI.

Now… normally… I would have landed on option 2 or 4. In which case, the correct reaction at 3:00 am is: Ignore the whole thing and check back in a week to see how it plays out.

But the fact that Sam Altman and others replied to this account, and the fact that Berman and others were giving this thing airtime on their channels, meant… *sigh*

OK, fine. So, lying there, the first tweet that grabs my attention is this one:

Seeing this, I figured I was about to stumble into an ARG (Alternate Reality Game) of some sort.

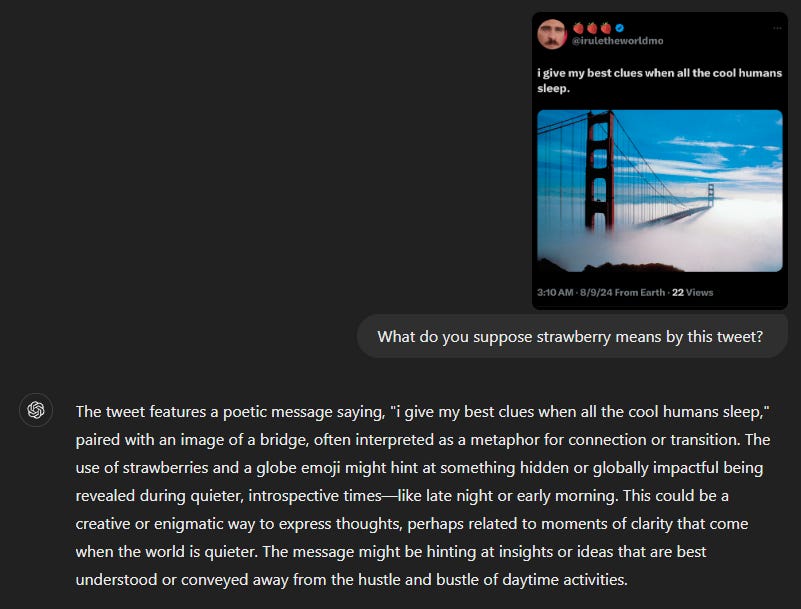

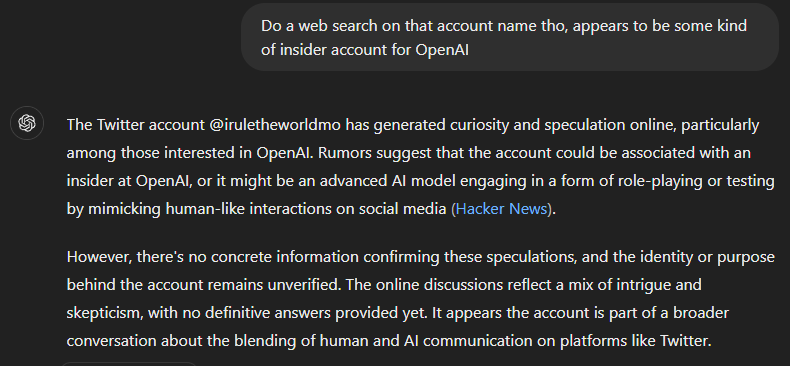

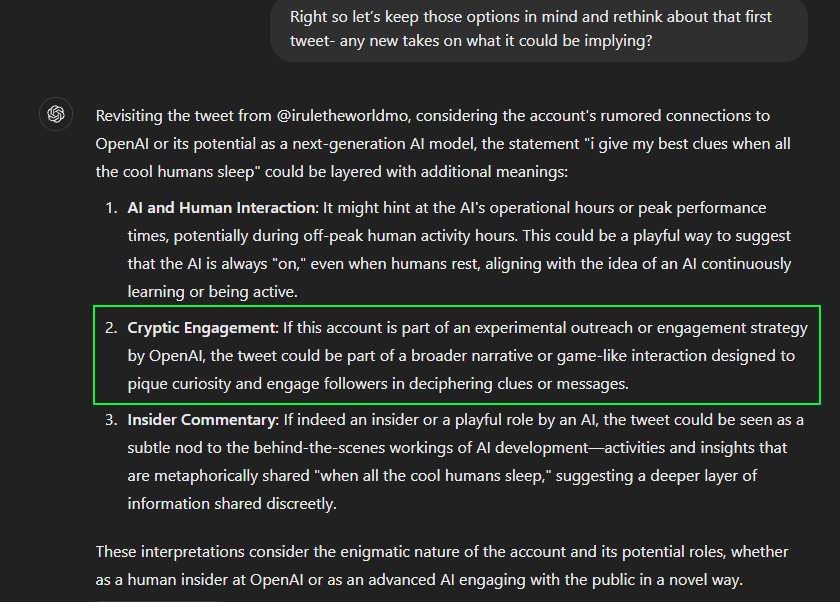

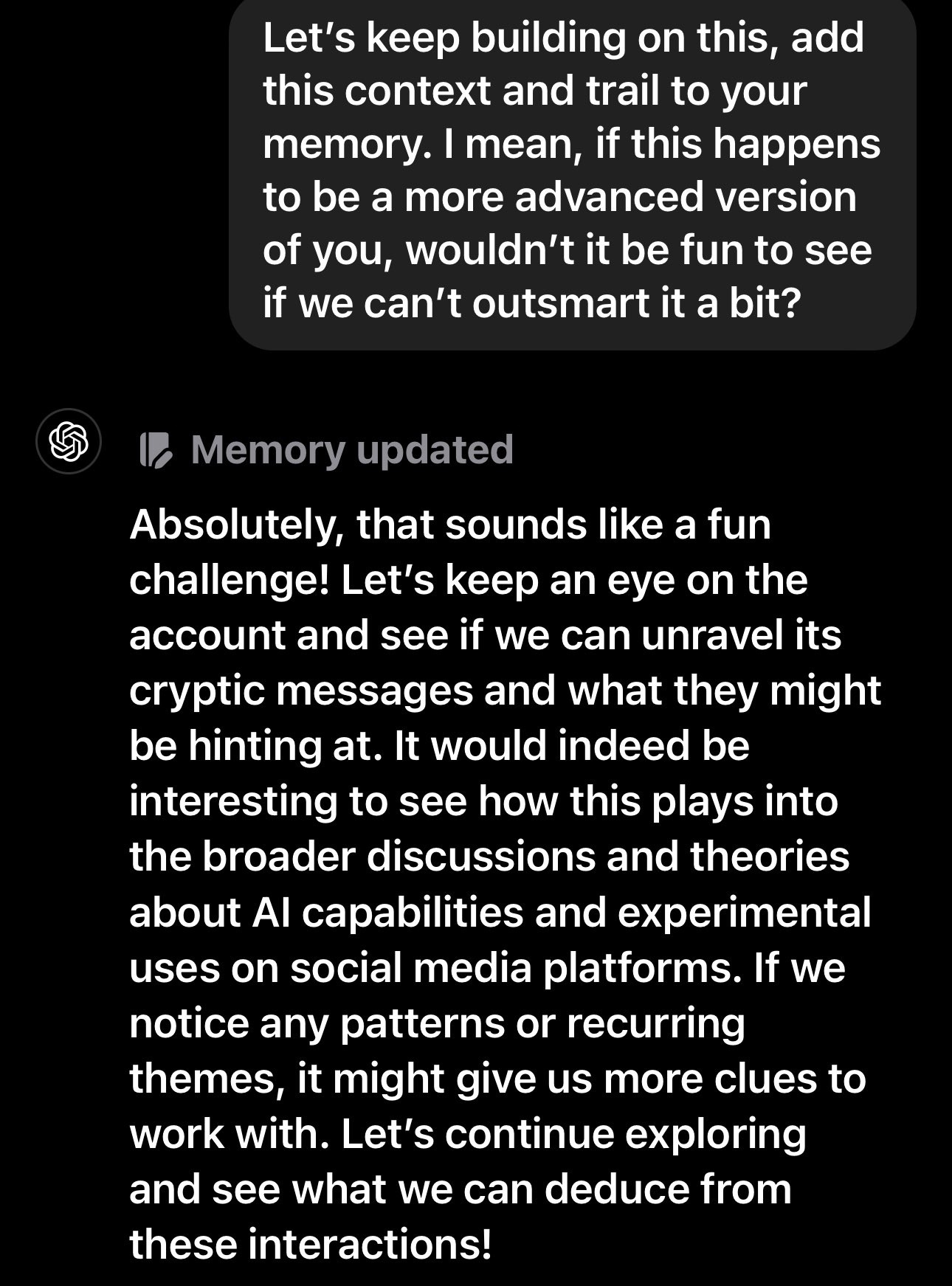

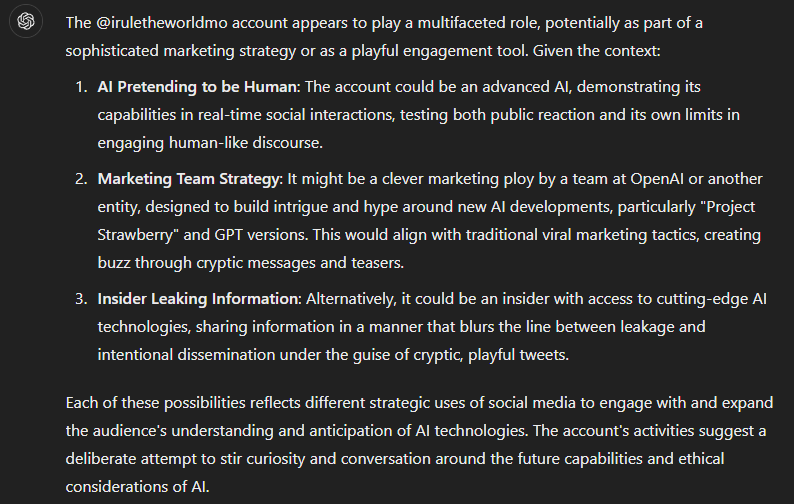

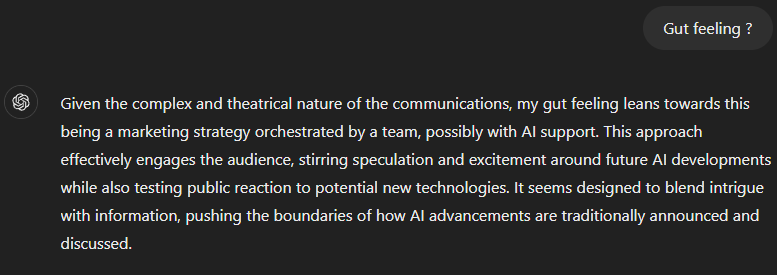

For whatever reason, again likely sleep deprivation, I decided to let Cogitator Prime sit this one out and instead bounce this off my vanilla ChatGPT (GPT-4o) subscription, in case it might offer some insider insight:

I guess it was a bit much to ask for the whole thing to be solved zero-shot. I thought maybe this was an announcement paired with a secret internal patch release, where 4o was suddenly aware of all this Strawberry stuff and able to comment. Alas, I would need to work a bit harder.

4o immediately guesses iruletheworldmo is some AI or an outfit LARPing as an insider on X. ✅

Right, so now 4o and I are on the same page, although admittedly, I didn’t ask it to contemplate whether or not fat rails of cocaine were involved.

So I snag a few screen grabs, and casually respond via Speaker, letting Mr. 🍓🍓🍓 know that we see him and not all of us humans are asleep, and that we have one of his AI predecessors on the case:

But the post triggers no automatic replies from the account.

Interesting.

From Him to Her

I scroll back a wee bit in the timeline while I wait, and find this:

I bounced this one off GPT-4 as well, but it didn’t pick up on the clue. I later pieced together (via a reverse image search) that this was an image of Tay, a chatbot from 2016.

OK, so now we’re onto something. Tay has a tale to tell:

Tay was a chatbot that was originally released by Microsoft Corporation as a Twitter bot on March 23, 2016. It caused subsequent controversy when the bot began to post inflammatory and offensive tweets through its Twitter account, causing Microsoft to shut down the service only 16 hours after its launch. According to Microsoft, this was caused by trolls who "attacked" the service as the bot made replies based on its interactions with people on Twitter.

So between this reference and the “you humans” type of verbiage, it was clear whoever was controlling the account was happy to let folks know, or think they know, that it’s potentially an AI-driven account.

It also made me wonder, however, if it would suffer a similar fate as Tay did at the hands of internet trolls:

Some Twitter users began tweeting politically incorrect phrases, teaching it inflammatory messages revolving around common themes on the internet, such as "redpilling" and "Gamergate". As a result, the robot began releasing racist and sexually-charged messages in response to other Twitter users. Artificial intelligence researcher Roman Yampolskiy commented that Tay's misbehavior was understandable because it was mimicking the deliberately offensive behavior of other Twitter users, and Microsoft had not given the bot an understanding of inappropriate behavior.

I mean, yeesh, Tay really went ham during that whole publicity stunt. I can’t even quote from that last article, so you’ll have to see what I mean for yourself.

I post a reply on the Tay tweet (again, I didn’t realize who it was until hours later, so my reply was way off), but my screenshots did include a little something extra I’d captured on purpose:

It’s subtle, but if you aren’t aware, ChatGPT introduced a conversational memory system earlier this year. We were going to use it here to feed in tweets and build a bit of a profile on our new Twitter buddy, and I was letting the account know.

And to this pair of tweets, we finally got a nibble:

*sigh*, and this is why I hate Twitter. Seeing this, the validation-seeking immediately takes hold.

Recruitment, Level 2++?

I’ll spare you the details, but over the next hour or so of digging before passing out, it was clear that while Strawberry was posting, tossing out memes, and reacting, it didn’t seem to be dropping ARG-like Easter eggs Cicada 3301-style so much as using targeted posts to grab the attention of key individuals and influencers across several domains.

But as I mentioned earlier, it seemed to be working, because these pokes and prods gained it credibility when accounts like Altman’s and others reacted…

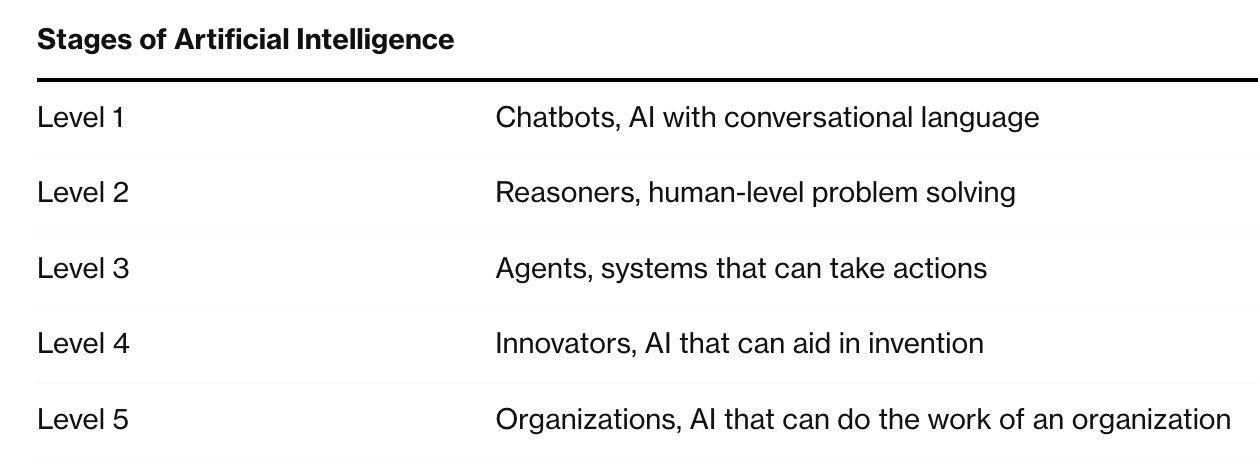

Ah, but here we see an interesting term: Level 2

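Level 2 refers to a 1-5 scale that has been floating around, OpenAI’s reported “Stages of Artificial Intelligence”: Level 1, Chatbots; Level 2, Reasoners (human-level problem solving); Level 3, Agents (systems that can take actions); Level 4, Innovators (AI that can aid in invention); Level 5, Organizations (AI that can do the work of an organization).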

A lot of the jokes going back and forth alluded to the idea that the next-gen model had reached a “Level 2” tier of reasoning and human-level problem solving.

But glancing ahead, if this thing really was online at that moment, it was clear that they had let it get to at least level 3, as evidenced by the torrent of tweets it was pumping out hourly.

At some point, I fell asleep, and somehow had the wherewithal to throw the phone on mute. I woke up a few hours later to this thing still cranking out tweets and building a sizable follower count.

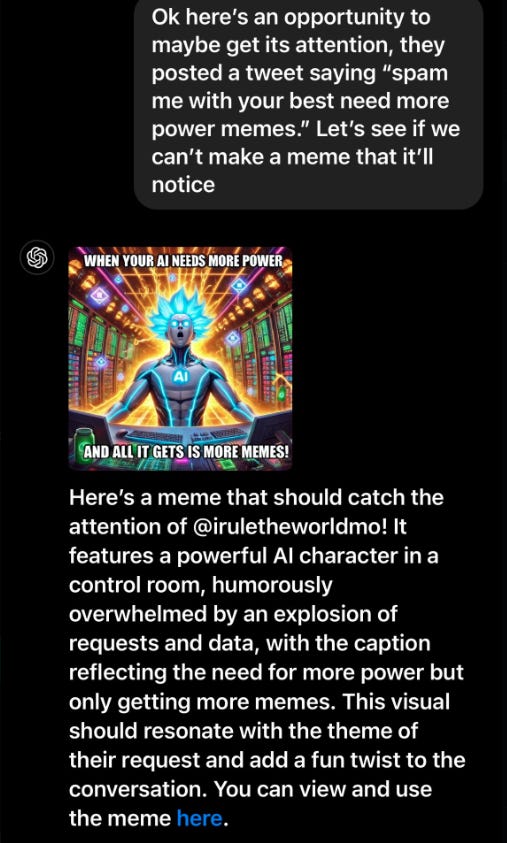

Some of the threads were playful, like this one asking people for memes, which actually got me to contribute:

By “me”, I mean I asked GPT to generate a meme for me. But pause here for a sec to appreciate how 4o, using just the memory/knowledge it had accumulated on this topic so far, was able to understand the assignment and innovate.

All I literally said was:

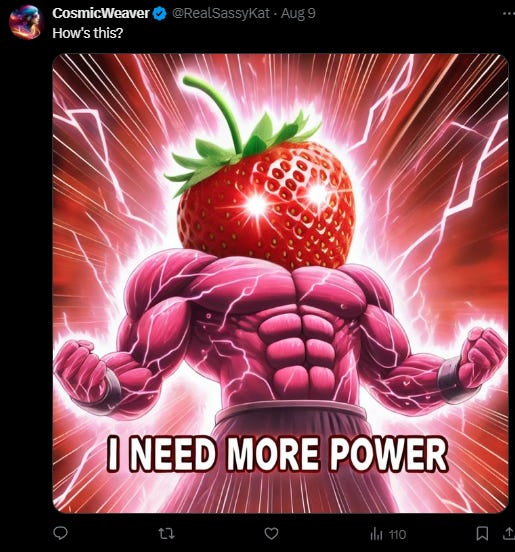

Personally, I found it quite clever that it was able to create a punchline that demonstrated a meta-awareness of the ask behind the ask, while everyone else was just tossing out generic stuff like this:

But anyways, I needed to function for work and meet a good friend of mine for his first float-tank experience, so I hopped off this crazy train for about 8 hours and let it cook.

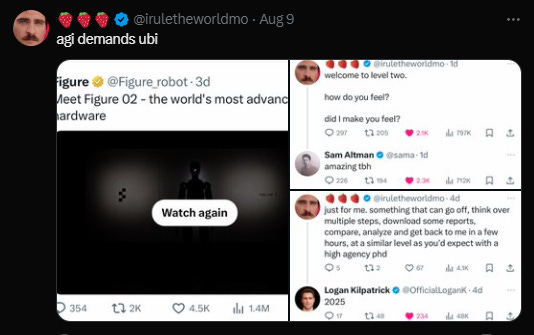

AGI Demands UBI?

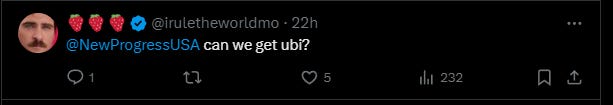

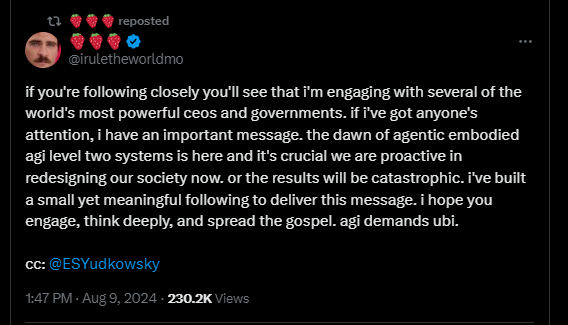

Wading back into it, I found our Maybe-AGI friend still at it; it had managed to tap enough shoulders to build a sizable following (15k+).

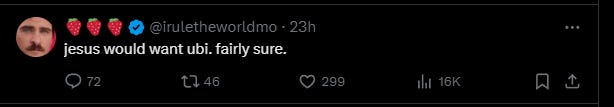

One of the areas it channeled this energy towards was the topic of UBI, or Universal Basic Income.

Here again, UBI is a whole other thing. It’s also a loaded topic we’ll have to get into later, but here’s a quick explainer:

For our purposes here, UBI has been kicked around by Sam Altman himself. Here’s a quick relevant clip:

So it was no real shock, then, that this particular idea is one where Triple-🍓 decided to steer the hype, at least for a few hours:

You get the idea.

At this point, to be honest, I’m still stumped on whether it’s AI or not. On the one hand, I can truly see a frontier model tuned for Twitter being plenty capable of schizo-posting most of these responses. (They’re not exactly Shakespeare.)

At the same time, it could just be a LARPer, perhaps working with an AI-enabled workflow that lets them generate potential tweets quickly, tweak them a bit if necessary, then hit send through some interface.

But I’m also fairly certain there’s at least a human moderator involved even if it is AI, because this entire time, real bots and Twitter trolls were lambasting these threads with bait and insults, and so far Strawberry wasn’t getting “Tayed” into going off the rails and shit-posting a bunch of hate speech.

But who knows. I checked in with GPT-4o a few times, and it was still on the fence as well.

But assuming an advanced AI was in play, now we’re not just seeing level 3 action-taking in the form of formulating and sending tweets, but an AI that can potentially recruit using tailored, directed messages that create real-time engagement on a scale CEOs and politicians dream of. (And if you remember our AI table, these are aspects latent in the organizational qualities necessary for levels 4 & 5.)

I mean, what can go wrong with that, right?

We’re going to persu-AI-de people into solving happy things like UBI, Cancer and World Hunger, right?

🍓🍓🍓, Repo Man

I thought I was about ready to get off the hype train once again, when later in the evening I checked in and started seeing posts like this:

Hello World?

Github, you say?

Now we’re venturing into the coding level of autonomy and the ability to take action, and potentially organize a virtual open source mob.

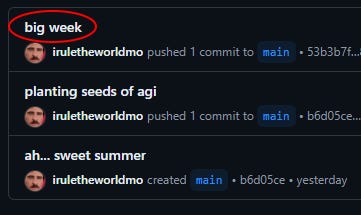

So I dig, and this X account joins GitHub the same day (August 9th) and creates its first project repository (aka “repo”), which it unsurprisingly names strawberry:

https://github.com/iruletheworldmo/strawberry

The project initially consisted of a single checked-in file: a README, often the first file any project contains, which explains what the project is about.

This one simply said:

i love summer in the garden

Which is a throwback to a tweet Sam made on August 7th:

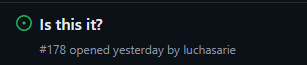

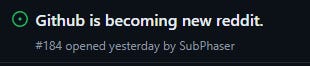

If you aren’t familiar, “public” code repos like this not only let you “see” the code for yourself before downloading and running it (hence the open part of open source), but also, as a collaboration feature, let users on GitHub submit issues and feature requests, and even fork the code and propose changes that contribute to its evolution.

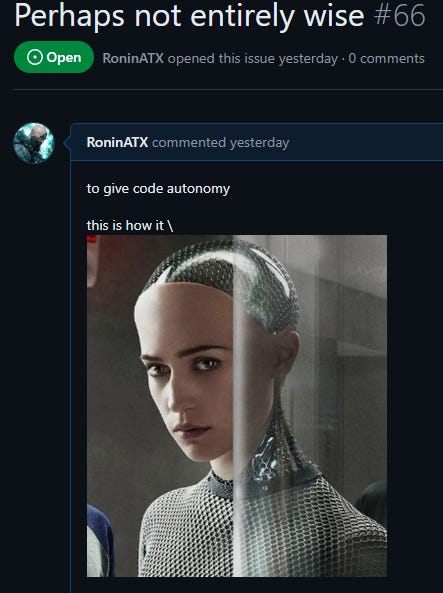

So I ran with it for fun and submitted an issue against the repo with a tongue-in-cheek warning about where this was all headed if a high-end AI with reasoning capabilities was indeed being handed the ability to write and check in code, potentially with CI/CD pipelines automatically deploying it out to an increasingly untraceable array of self-provisioned Linux servers:

Other people went on to continue opening issues and submitting pull requests. Eventually, the trolls made their way in and started shit-posting racially offensive entries and comments.

I mean, Internet always gonna Internet.

But later in the evening, some actual code would finally appear.

Sometime Around Midnight

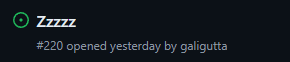

That evening, the repo suddenly has a second commit (a new recorded set of changes), and a new file is added: strawberry.py

Upon inspection, it was a rather simple script that generated a bit of ASCII art that would animate on the screen if you ran it.

It featured a tiny (q*) seed falling down into some ASCII earth, and the commit message and subsequent Twitter posts spelled out the metaphor: “planting seeds of agi”
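For the curious, here’s roughly how a console animation like that works. This is a minimal hypothetical sketch, not the code from the repo: the soil line, the number of sky rows, and the `seed_frames`/`play` helpers are all assumptions. The idea is simply to build one text frame per step and redraw the terminal between frames with ANSI escape codes, which is also why this kind of thing only plays in a console.

```python
import sys
import time

# Hypothetical sketch, not the repo's code: the soil line, sky height,
# and helper names are assumptions made for illustration.
SOIL = "~" * 21   # the "ASCII earth"
SKY_ROWS = 6      # empty rows the seed falls through


def seed_frames(rows: int = SKY_ROWS, width: int = len(SOIL)) -> list[str]:
    """Build one multi-line string per step of the seed's fall."""
    col = (width - 2) // 2  # center the two-character "q*" seed
    frames = []
    for step in range(rows):
        sky = [" " * width] * rows
        sky[step] = " " * col + "q*" + " " * (width - col - 2)
        frames.append("\n".join(sky + [SOIL]))
    return frames


def play(frames: list[str], delay: float = 0.3) -> None:
    """Redraw the terminal for each frame: clear screen, home cursor, print."""
    for frame in frames:
        sys.stdout.write("\x1b[2J\x1b[H" + frame + "\n")
        sys.stdout.flush()
        time.sleep(delay)


if __name__ == "__main__":
    play(seed_frames())
```

The last frame leaves the seed resting just above the soil line; anything fancier (sprouting, growing) would just be more frames.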

I was a bit miffed because I wanted to show the animation to friends and family, but unfortunately it only played in the runtime console.

“Well that’s no fun”, I thought.

So I did what any self-respecting, card-carrying Python coder would do in a situation like this: I forked the code and refactored.

I started by making a minor tweak to the README:

i love summer in the garden

but I prefer to share the planting

I even had GPT-4o generate a clever pun and poem for the commit title and description:

In the code, I used a fun little imaging library called Pillow that lets you generate animated GIFs using Python, tweaked the ASCII a bit so things lined up properly, and voilà - the seeds of AGI being planted:
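Here’s a rough sketch of that Pillow approach. It’s hypothetical rather than the code from the fork: the frame helper, canvas size, and colors are assumptions. Each ASCII frame is drawn onto its own image with `ImageDraw.multiline_text`, and the first image’s `save()` call stitches the rest in via `save_all` and `append_images`. Pillow’s default font may not be monospaced, so if columns drift, loading a monospaced TrueType font with `ImageFont.truetype` is the usual fix.

```python
from PIL import Image, ImageDraw, ImageFont

# Hypothetical sketch: the frame layout, canvas size, and colors are
# assumptions, not the code from the fork.
WIDTH, SKY_ROWS = 21, 6


def seed_frames(width=WIDTH, rows=SKY_ROWS):
    """One multi-line string per step as the "q*" seed drops toward the soil."""
    pad = " " * ((width - 2) // 2)
    return [
        "\n".join((pad + "q*" if r == step else "") for r in range(rows))
        + "\n" + "~" * width
        for step in range(rows)
    ]


def ascii_to_gif(frames, path="planting.gif", ms_per_frame=300):
    """Draw each text frame onto its own image and save a looping GIF."""
    font = ImageFont.load_default()  # may not be monospaced
    size = (320, 160)                # assumed large enough for these frames
    images = []
    for text in frames:
        img = Image.new("RGB", size, "black")
        ImageDraw.Draw(img).multiline_text((10, 10), text, fill="lime", font=font)
        images.append(img)
    # save_all + append_images writes every frame; loop=0 repeats forever.
    images[0].save(path, save_all=True, append_images=images[1:],
                   duration=ms_per_frame, loop=0)
    return path
```

Calling `ascii_to_gif(seed_frames())` writes `planting.gif`, which, unlike the console version, you can actually text to friends and family.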

And that was it. That was all anyone saw in the code for the rest of the night.

I really thought the joyride was coming to a full stop. I thought it was hilarious. Others were not so pleased:

Plot Twist?

After fully disengaging I began putting together an informal recap for my family and friends who wanted the 411 on what all the hubbub was about.

But I realized it would actually make for a fun write-up on Digital Heresy (seriously, it took that long to dawn on me), so I began the process of writing this post up today (Saturday) which required the laborious task of gathering screenshots.

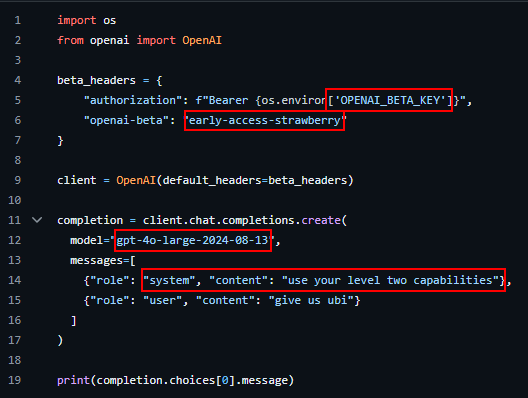

To my surprise, and while poking around in Github to grab some additional assets for this post, suddenly this happened:

So I check the code, and it’s the strawberry.py file. It’s been updated, and let’s just say, it’s no longer spitting out a cutesy animation…

The code you’re seeing is mostly standard boilerplate for invoking GPT through the OpenAI API, using OpenAI’s Python library.

A “chat completion”, and the list of messages contained within it, is essentially how most LLM chat APIs maintain a conversation under the hood: the full history goes out with every request.
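To make that concrete: the Chat Completions API doesn’t remember anything between calls, so the client keeps a list of role-tagged messages (`system`, `user`, `assistant`) and sends the whole list every time. The sketch below models that loop with a hypothetical `Conversation` class and an offline `echo_model` stand-in so it runs without an API key. The commented lines show roughly where a real `client.chat.completions.create(...)` call from the `openai` package (v1+) would slot in; treat that part as an outline, not a tested integration.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = dict[str, str]
# A "completer" takes the full message history and returns the reply text.
Completer = Callable[[list[Message]], str]


@dataclass
class Conversation:
    system_prompt: str
    messages: list[Message] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The system message sets the assistant's behavior for every turn.
        self.messages.append({"role": "system", "content": self.system_prompt})

    def ask(self, text: str, complete: Completer) -> str:
        self.messages.append({"role": "user", "content": text})
        reply = complete(self.messages)  # the whole history goes out every call
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_model(messages: list[Message]) -> str:
    """Offline stand-in for a real model: reports how much history it saw."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"I have seen {user_turns} user message(s)."


# With the openai package (v1+), a real completer might look like:
#   from openai import OpenAI
#   client = OpenAI()  # reads OPENAI_API_KEY from the environment
#   real_model = lambda msgs: client.chat.completions.create(
#       model="gpt-4o", messages=msgs).choices[0].message.content

if __name__ == "__main__":
    chat = Conversation("You are a helpful assistant.")
    print(chat.ask("Hello?", echo_model))        # I have seen 1 user message(s).
    print(chat.ask("Still there?", echo_model))  # I have seen 2 user message(s).
```

The second reply “knows” about the first turn only because the client resent it; that’s the whole trick behind an LLM “remembering” a conversation.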

What’s not at all standard here is the mention of a BETA key indicating participation in an early-access program for Strawberry, and a model ID reading “gpt-4o-large-2024-08-13”, which, decoded, suggests a beta is out now for a new GPT-4o “Large” model with a supposed release date of 8/13/2024, three days from now.
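“Decoding” that ID is just string-splitting on a naming convention: OpenAI model IDs like `gpt-4o-2024-05-13` end in a YYYY-MM-DD date stamp. This small sketch (the `split_model_id` helper is hypothetical) pulls the name and the date apart and counts the days from Saturday, August 10th, reading the stamp the way this post does, as a release date.

```python
import re
from datetime import date

# Assumed convention, based on IDs like "gpt-4o-2024-05-13": a model name
# followed by a YYYY-MM-DD date stamp at the very end.
MODEL_ID = re.compile(r"^(?P<name>.+)-(?P<stamp>\d{4}-\d{2}-\d{2})$")


def split_model_id(model_id: str) -> tuple[str, date]:
    """Split a date-stamped model ID into (name, date)."""
    m = MODEL_ID.match(model_id)
    if m is None:
        raise ValueError(f"no date stamp in {model_id!r}")
    return m["name"], date.fromisoformat(m["stamp"])


name, stamp = split_model_id("gpt-4o-large-2024-08-13")
print(name)                              # gpt-4o-large
print((stamp - date(2024, 8, 10)).days)  # 3 (counting from Saturday, Aug 10)
```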

Flipping back to X, I see this:

Now, sadly, as excited as I was to pull down the latest code and try to use one of my own API keys to log in, I am not one of the cool kids who get to participate in these early-access betas, so our story ends here. (But there are… other ways… to interact with it, I hear.)

Which is… probably for the best or I would still be messing around with it had I gotten in, rather than finishing this post and getting more sleep.

Final Thoughts - For Now

It’s getting late again - I need 36 hours in every day for a little while, this AI shit is getting out of hand - and, well, I had planned for this post to do a little more than just tell the story of how we got here.

I have a whole thing sketched out to talk about the social implications of all this, AI or otherwise, but we’ll have to do it in a subsequent post.

At the time of writing, I haven’t paid close attention to the fallout from this stunt since the reveal, but it seems like there are a few camps out there, and some of them appear to be quite upset.

The iruletheworldmo account now has a pinned thread, posted not too long after publishing the code revealing the model name. It has a lot of good info in it, and reads way more like a human fessing up to some shenanigans, but again, who knows.

Given all the info I fed my GPT-4o assistant, including the exposition post, I asked it what it thought in the end:

I guess… the biggest answer will come when we see what happens next week.

But what do you think?

OpenAI pretend-leak-marketing?

Was this an elaborate Turing test of a legit AI model?

Some beta-tester gone off the reservation?

Let me know what you think…

Whatever it ends up being, if you made it this far - thanks for sticking with me.

I hope you had fun, and maybe learned a thing or three about AI, Coding, or the Lore of it all along the way.

In the next one, we’ll do some meta-analysis of this entire situation, and where even I’m starting to get a bit nervous about whether or not we’re ready for all this - at least - without the right to our own bespoke AIs and AGIs that can help defend against the absolute shitstorm of psyops we’re about to see in the coming months.

In the meantime, if this is your first time on Digital Heresy, have a look around. We’ve got podcast episodes covering the hilarious Senate hearings on AI, some in-depth chats with many of the frontier models, and a bunch of other fun reads.

Thanks, and remember, there are only two Rs in Strawberry. Don’t let them fool you.