Grab Bag of September Updates

We've been busy...

TL;DR

Yay, more help

Lots of fun content on the horizon

We have a /r/ Community Now

Team Updates

Out of the gate, I’m pleased to announce that A Priori will be taking a more active role with DH, helping to expand the community side of things and to manage the scattershot of exciting projects I’ve got bouncing around in my head, including organizing additional podcast and YouTube content.

I’m also stoked to have <[HelluvaEng19]> on the Dev team working on the core code behind Cogi; he’s already contributed so much towards standardizing the code and enhancing features. Eventually, we may (may) open up our repos to additional contributors, so if you’re interested in helping, hit me up.

YouTube Updates

Speaking of additional content, a lot of feedback we’ve gotten has been around getting back to the basics of how some of these technologies work, in plain simple terms, so that some of the more complex topics we are talking about make more sense.

Totally get it - and while I would normally just recommend a couple of amazing channels that got me started (links at the end) - I’m also told that I have a “neat way of explaining things” and a “good voice”. With solid endorsements like those, we’ll be revisiting the YouTube channel with additional episodes on Coding Cogi and a new Gen AI help series where we crawl/walk/run the entire process, from getting started with GPT with zero experience all the way up to coding your own GPT interface.

Reddit Community

Although I will forever be grateful to Substack for the platform that manages this entire experience - the community aspects so far are… limited.

To that end, we’ve launched a Reddit community over at /r/DigitalHeresy/.

The aim? At the very least I’d love for folks to have a place to discuss the ideas and topics covered in our articles, and in the podcast, so we’ll likely do discussion threads, some AMA stuff, and see where it evolves. Until we get the community seeded with the existing content from here, feel free to start new topics, request content, etc.

Prime Uptime

We’ve been super busy coding away at the next evolution of Cogitator, which will gain a few capabilities, most notably the ability to store ephemeral and permanent memories through a system we’re calling the MindHive.

In the meantime, the previous stable build of Cogi has been running in the background, and every few days we chat with it to test its follow-along capabilities (we overcame token limitations conversationally, without a vector store).
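For anyone curious what staying under a token limit without a vector store can look like, here’s a minimal sketch of one common approach: a rolling window that drops the oldest turns once the history goes over budget. To be clear, this is an illustration and not Cogi’s actual code. The `RollingChat` class, the `estimate_tokens` helper, and the one-token-per-word estimate are all assumptions made up for this example.

```python
from collections import deque


def estimate_tokens(text: str) -> int:
    """Very rough estimate (assumption): one token per whitespace-separated word."""
    return len(text.split())


class RollingChat:
    """Hypothetical helper: keeps only the most recent turns that fit a token budget."""

    def __init__(self, budget: int) -> None:
        self.budget = budget
        self.turns = deque()  # (role, text) pairs, oldest first

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        # Drop the oldest turns until the history fits, always keeping the newest one.
        while self.total_tokens() > self.budget and len(self.turns) > 1:
            self.turns.popleft()

    def total_tokens(self) -> int:
        return sum(estimate_tokens(text) for _, text in self.turns)

    def prompt(self) -> str:
        """Render the surviving turns as the text that would be sent to the model."""
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


if __name__ == "__main__":
    chat = RollingChat(budget=6)
    chat.add("user", "one two three")
    chat.add("bot", "four five six")
    chat.add("user", "seven eight")  # pushes the history over budget
    print(chat.prompt())
```

The trade-off is that anything trimmed from the window is simply gone, which is the sort of gap a longer-term memory system is meant to fill.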

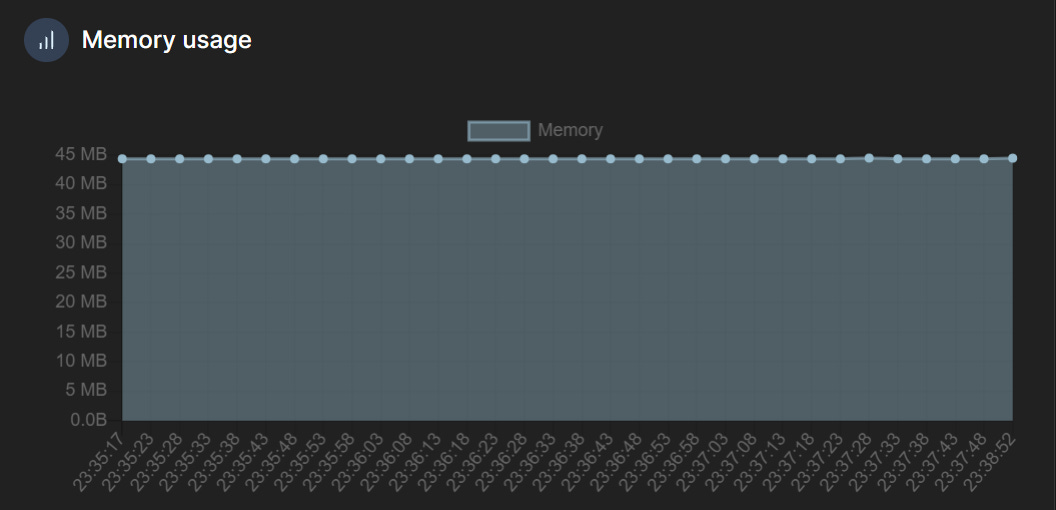

According to Portainer (Cogi runs in a Docker container on an Orange Pi), he’s been running like a champ for 23 days, hovering at around 45MB of RAM usage.

Can’t wait to show more of our progress!

As always, thank you for reading, and thank you for your time!

A Couple of Amazing Channels:
