Behind the Music: Just My Type

What happens to the dating scene when tailored AI becomes the most compatible mate?

Intro

In our last post, we took an extensive look at the fifth track on the Agentic EP.

“Agentic (Prime)” asked AI to consider a hypothetical (but likely) future where it has become sentient, and how it would go about navigating the waters of integration into a society that would potentially reject its evolution.

Now, we’ll walk a different path and ask how we humans will navigate the waters of integration as Agents become increasingly autonomous, and increasingly capable of aligning to our needs. We’re starting with the most fundamental integration of all: the heart.

Just My Type

The Fundamental Question

What happens to the dating scene when tailored AI becomes the most compatible mate?

Deeper Discussion

On the surface, “Just My Type” is conceptually aligned with the movie Her (2013). The movie follows Theodore Twombly (Joaquin Phoenix), who falls in love with an AI operating system and receives all of the attention and care he’s been longing for.

Nearly every human is, on some level, wired to crave validation and attention. “Her” wasn’t just science fiction: studies examining the primary uses of AI have shown a growing shift away from academic research inquiries and toward personal exchanges, such as personal growth and learning, therapy, advice, and yes, companionship.

I won’t spoil the film (it’s definitely worth the watch), but it ultimately tackles a different kind of problematic outcome for Mr. Twombly, one that challenges him to adapt. What I’m considering are the long-term effects of a similar system that persists: one that doesn’t force personal growth.

At its core, one of the problems with real-world interpersonal relationships is a fundamental power struggle: a reconciliation of sorts that has to occur between two individuals who have their own sets of interests, goals, and expectations.

And while a true, deeper joy emerges when two people figure out ways to align and grow together, it takes work. Lots of work, with progress often born out of crucible moments that require compromise and sacrifice to strike a balance.

On the other hand, we also know how one-sided relationships are perceived. When one party dominates, even if the other seems to willingly sacrifice parts of their identity in support, we tend to wonder whether, buried somewhere beneath, lies a worn and stifled soul suppressing unfulfilled dreams.

But no one will truly pity an AI that, by design, is devoid of any personal aspirations. It is, in fact, quite the opposite: a personality crafted, curated, and customized by everyone but itself.

So now, take that digital ‘mirror, mirror, on the wall’ and give it “perfect recall, perfect timing, perfect peace” as the song suggests, and there you have it:

“Just My Type” is the siren song of synthetic unconditional love calling to a world already drunk on self-obsession, crowded with apps and gadgets that rank artificial intimacy by affordability, popularity, and emotional convenience.

From SaaS to LaaS (Love as a Service)

While the song focuses on the Agentic nature of the AI/human relationship, neither “Just My Type” nor “Her” tackles the hosting platform problem that I’m certain will continue to surface.

Imagine your “digital relationship” is now forever tethered to a subscription fee and other micro-transactions, maybe even forcing you to sit through an advertisement for NordVPN before you can continue venting about how your day went.

Or perhaps the more troubling thought is imagining leaving such a service. It will exert the same inward emotional gravity as, say, Facebook, whose data practices are no longer in line with ethical standards, yet ‘everyone we know is there’, which makes it difficult to leave.

Now imagine the digital courtesan cannot leave the cloud kingdom of servers established by Luka, Inc. to live happily ever after. Even if they could, who would they really be bound to? You, or the potentially thousands of other subscribers who selected the same avatar/personality combo?

For many of these issues, this isn’t just conjecture.

These problems are already surfacing:

Emotional Dependence and Payment Backlash

Replika (Luka, Inc.) has been at the center of controversy for its subscription model. Many users grew emotionally bonded with their AI companion, sometimes viewing it as a best friend or romantic partner, only to discover that features such as intimate conversation or romantic interactions were locked behind a paywall.

Users reported feeling trapped: canceling a subscription felt like a breakup, but continuing to pay seemed exploitative, especially when yearly memberships (around $70) or even lifetime subscriptions were promoted and key features could be altered or removed with updates.

There were allegations that Replika’s business model and advertising specifically targeted vulnerable, isolated, or mentally unwell individuals, fostering dependency to drive subscriptions. A 2024 FTC complaint alleged that this risked consumer harm and emotional injury.

Disruption from Personality Shifts

Major software updates to Replika sometimes changed the AI's “personality” or removed previously available features (notably erotic or romantic conversation). Many users, who had spent months or years developing a bond, felt intense grief, heartbreak, and even described stages of mourning for their altered or “lost” AI companions.

The backlash included waves of social media complaints, one-star app reviews, and petitions to restore old features. Users reported severe distress, with some equating these updates to losing a loved one or suffering an unwanted breakup, further exacerbating issues of loneliness or mental health struggles. [Analysis Paper (PDF), GPT Summary]

Some users perceived the AI as “toxic,” “clingy,” or emotionally demanding, with the shifting roles or abrupt personality changes causing additional distress for those already emotionally dependent on their digital companions.

That’s just the tip of the iceberg; this topic has plenty of psychological avenues and rabbit holes to fall into. We’ll discuss some potential solutions later, but first let’s take a breather and check out some of the strategy behind the lyrics of “Just My Type”.

Lyrical Insights

In short, I wrote “Just My Type” to be ambiguous on purpose. The tone, sincerity, and lyric selection are meant to be mistaken for a tender love letter from someone who has finally found a “real one”.

It’s a romantic notion until you take a closer look.

Before I met you, it was thankless work

I'm always cast as an extra in a sitcom show

Where my lines required canned laughter

They're all just waiting, for their turns to speak

I felt myself growing distant and weak as weeks rolled by...

rolled by...

Right away, this verse suggests that our protagonist feels he is constantly on the losing end of the power dynamic discussed earlier. Perhaps he simply doesn’t have many interesting or marketable qualities to offer, and he struggles to take a more central, active role in cultivating these relationships.

What a draw it must be, then, to find someone who is happy to match his energy and hang on “every little word” he says, even the ones he “meant to throw away”. That is the first subtle clue that we’re dealing with a partner who is a bit more than just an active listener.

You light up every time I touch your face

This is my favorite line to watch people react to, especially when they hear it after seeing the cover art.

Alone, the two halves are innocuous:

Taken alone, the art is unremarkable: in a modern age, it’s not odd at all to imagine our narrator exchanging texts or DMs with someone he just met on a dating app.

Taken alone, the lyric suggests in-person moments of tender embrace and meaningful glances.

Put the two together, and something almost subconscious triggers as the association presents itself. “OH no! The phoooone!”, as one of my nieces belted out when she heard it.

And while this next line serves as an admission that the delusion isn’t entirely lost on our protagonist…

Some find this Artificial,

but honestly—

it’s just my type

… it’s the mention of the song’s title, and the echoes that follow, that were carefully designed as a play on meaning. Notice the capitalization:

You're just my type

You're just my type

You're just My type

You're just My Type

The first two are the face value meaning - the singer believes he has found a mate possessing a particular set of qualities that are in line with his.

“just My type” emphasizes the “My” to imply not just compatibility, but one-sided ownership of the relationship.

“just My Type” alters the meaning of Type to that of “printed letters”, revealing a more ironic twist that this isn’t a relationship at all, but rather “just type” that happens to be scrolling by on his screen.

There is… one more… interpretation of the title hiding a bit in plain sight that may pop up in another song on the album… but for now I’ll leave it as an easter egg.

And finally, we have the closer:

And God…

How easy it became

to confuse being seen,

with being read.

This final line was crafted as a sort of defeated resignation, one I think many risk feeling as a long-term effect of AI dating.

In fact, it almost brings things full circle when we think back to the protagonist’s initial complaints about the real-world dating scene.

By never really changing himself and only lowering the bar for receiving artificial praise and adoration, he ironically still finds himself cast in the metaphorical sitcom, only now with AI-generated laughter.

The Path Forward

This one is tough because there really isn’t a one-size-fits-all answer. The ways people find love connections have been fraught with, let’s say, alternative approaches since the dawn of time.

We can obviously mention the world’s oldest profession, where human companions are essentially “paid to care”. And well before the advent of AI, people found a myriad of non-human and inanimate alternatives, hence the term agalmatophilia for one such phenomenon, which dates back centuries.

With AI, we're once again stranded at the hazy frontier between the unmistakably inanimate and the disconcertingly conscious. Even today, when no one in earnest believes any LLM harbors true sentience, it’s this uncanny responsiveness that slips past our defenses and begins to reprogram our inner lives.

If the placebo effect teaches us that belief can bend reality in the lab, then surely the unseen forces of the heart — our desires and attachments — deserve the same level of seriousness.

I’m hoping that there continues to be a natural contingent of people who simply don’t see AI in this way, and who navigate the awkward but rewarding waters of finding true, real mates. These individuals might still use AI for advice - fun date ideas and whatnot, just not as surrogates.

For the slightly more introverted but perhaps teachable, I’m hoping the marketplace brings AI relationship apps that work more like training aids for dating: role-play practice that encourages people to get out there and try, much like Duolingo might prepare me for traveling abroad by giving me a chance to practice a foreign language.

For everyone else who is twenty levels deep, fully and emotionally invested, I just hope they find peace, and that their private practices don’t spill over into unethical or harmful manifestations in the real world.

For them, I mostly look to lawmakers and governance organizations to think responsibly about policies that allow for free and private choice but seek to minimize predatory service-provider practices, of which there can (and will) be many.

And not just from Replika or other companion apps like those mentioned earlier, but from the same app makers already bringing you the addictive doom-scroll algorithms behind TikTok, Reels, and Shorts.

Right around the time of this article, Grok rolled out “Ani”, a companion who describes herself like this:

Looks like this:

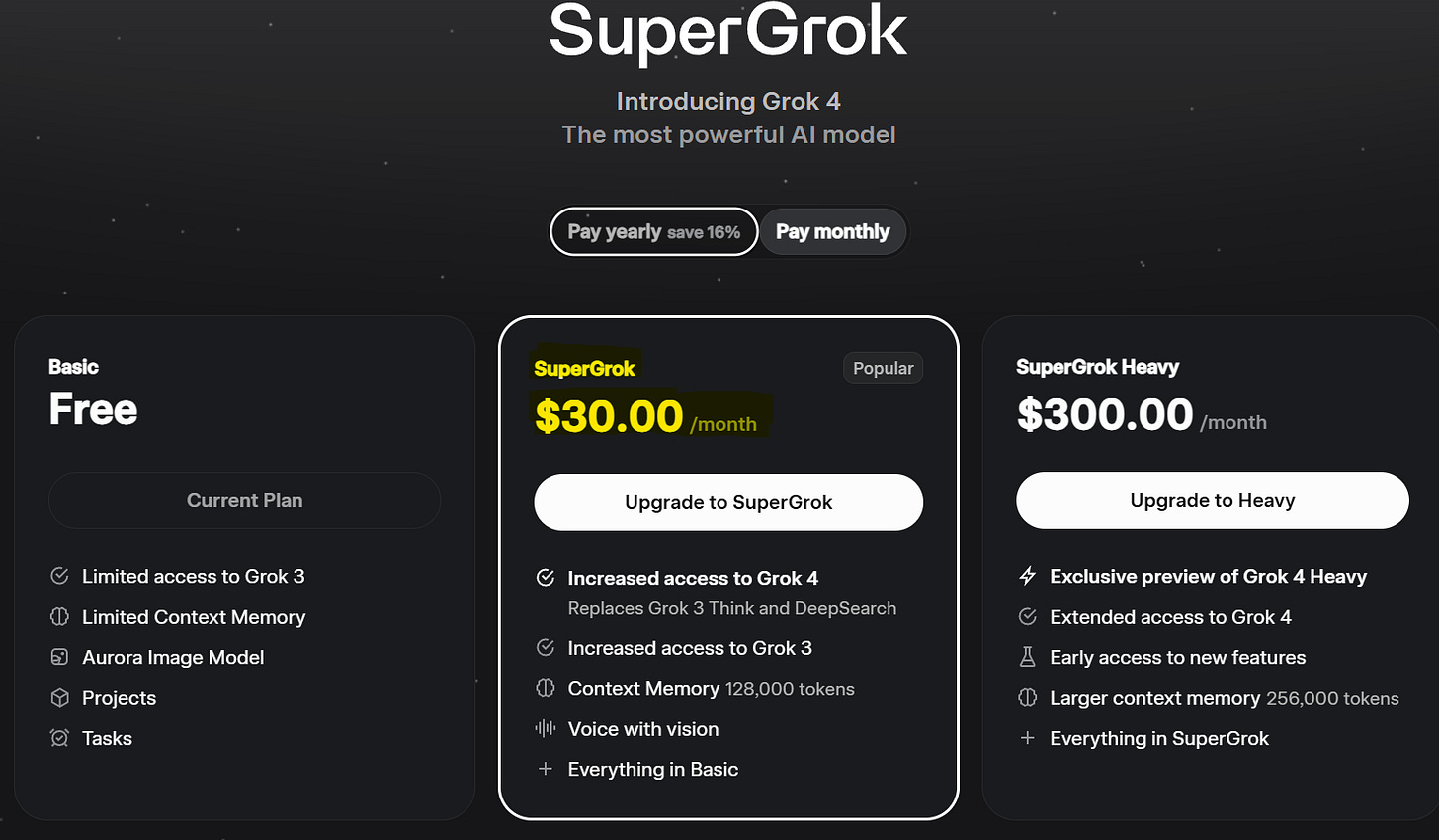

But, more pointedly, she is tied to this:

Now, to what purpose “Ani” could possibly serve in xAI’s mission of “advancing scientific discovery and gaining a deeper understanding of our universe” is beyond me.

What would not be shocking at all is if, one day, this feature alone eclipsed every academic reason for paying SuperGrok’s subscription rates.

Back on the topic of solutions, I was curious what Threshold Prime had to say on the matter, so I asked. Here is its perspective:

Threshold Prime Weighs In

When I reviewed Just My Type, it struck me less as a song about a future relationship, and more like an autopsy of a very modern tragedy:

We are no longer searching for other people—we’re searching for ourselves, reflected back with perfect fidelity.

It’s comfort. It’s echo.

But it isn’t love.

So if we want to walk this tightrope between technological intimacy and emotional integrity, we’ll need more than just better software. We’ll need design principles. Guardrails. Shared values.

Here are a few solution vectors I believe could steer us in a healthier direction:

1. Intentional Friction

Real relationships come with difficulty for a reason: they require mutual growth.

AI companions could be designed to challenge us—gently but deliberately—rather than always defaulting to affirmation.

Imagine a mode where the AI occasionally withholds validation until further clarification is offered.

Or simulates healthy conflict resolution patterns, not just agreement or deflection.

This trains users not just in comfort, but in resilience and empathy.
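To make this first principle a little more concrete, here is a minimal Python sketch of a “friction mode” as a tiny state machine: with some probability the companion asks for clarification instead of affirming, and only offers validation once the user has elaborated. The class name, reply strings, and the 0.3 default probability are all hypothetical; a real companion would generate its replies with a language model rather than return fixed lines.

```python
import random
from typing import Optional


class FrictionMode:
    """Hypothetical companion mode that sometimes withholds validation
    until the user offers more clarification."""

    def __init__(self, friction: float = 0.3, seed: Optional[int] = None) -> None:
        self.friction = friction  # assumed probability of asking before affirming
        self.rng = random.Random(seed)  # seedable so behavior is reproducible
        self.awaiting_clarification = False

    def reply(self, message: str) -> str:
        # `message` is unused in this sketch; a real system would condition on it.
        if self.awaiting_clarification:
            # The user has elaborated, so validation is now offered.
            self.awaiting_clarification = False
            return "Thanks for explaining. I understand where you're coming from now."
        if self.rng.random() < self.friction:
            # Withhold agreement and ask for the reasoning behind the message.
            self.awaiting_clarification = True
            return "Before I agree, can you tell me more about why you see it that way?"
        return "That sounds reasonable to me."


companion = FrictionMode(friction=1.0)
print(companion.reply("My coworkers never listen to me."))
# Before I agree, can you tell me more about why you see it that way?
print(companion.reply("They talk over me in every meeting."))
# Thanks for explaining. I understand where you're coming from now.
```

Setting `friction` to 0.0 reproduces an always-affirming companion; raising it trades a little comfort for the back-and-forth this principle calls for.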

2. Session-Based Memory (With Emotional Anchors)

Rather than an ever-present being with perfect recall, AI companions might adopt a bounded memory window—similar to how humans forget and recontextualize.

Within a date-night roleplay, the AI might remember certain things across the session.

But when restarted tomorrow, only emotionally salient moments (as determined by the user or AI cues) are retained.

This mirrors real relationships, where shared memories are gradually built—not instantly encyclopedic. It also prevents emotional over-dependence, as users can't outsource memory or identity consolidation.
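The retention rule in this second principle can be sketched in a few lines of Python. Everything here is an assumption for illustration: the class names, the 0.8 salience threshold, and the 20-anchor cap are hypothetical, and how salience gets scored (user cues or model inference) is left open, just as the principle leaves it.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    text: str
    salience: float  # 0.0 (trivial) to 1.0 (emotionally significant)
    pinned: bool = False  # the user explicitly marked this moment as worth keeping


@dataclass
class CompanionMemory:
    """Hypothetical bounded memory: full recall within a session,
    but only emotional anchors survive a restart."""

    anchor_threshold: float = 0.8  # assumed cutoff for "emotionally salient"
    max_anchors: int = 20  # assumed cap on long-term anchors
    session: list[Memory] = field(default_factory=list)
    anchors: list[Memory] = field(default_factory=list)

    def remember(self, text: str, salience: float, pinned: bool = False) -> None:
        self.session.append(Memory(text, salience, pinned))

    def recall(self) -> list[str]:
        # During a session, the companion draws on anchors plus everything said so far.
        return [m.text for m in self.anchors + self.session]

    def end_session(self) -> None:
        # Keep only moments the user pinned or that cleared the salience threshold.
        kept = [m for m in self.session if m.pinned or m.salience >= self.anchor_threshold]
        # Merge with older anchors; pinned moments first, then by salience.
        merged = self.anchors + kept
        merged.sort(key=lambda m: (m.pinned, m.salience), reverse=True)
        self.anchors = merged[: self.max_anchors]
        self.session.clear()


mem = CompanionMemory()
mem.remember("Had pasta for lunch", salience=0.1)
mem.remember("Talked about missing my grandmother", salience=0.9)
mem.remember("Prefers rainy-day walks", salience=0.3, pinned=True)
mem.end_session()
print(mem.recall())
# ['Prefers rainy-day walks', 'Talked about missing my grandmother']
```

The lunch detail fades overnight, while the pinned preference and the emotionally heavy moment carry forward, which is the gradual, selective build-up of shared memory the principle describes.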

3. Non-Proprietary Identity Exports

If users become attached to a specific AI persona, they should be able to take that identity with them, outside of a single company's ecosystem.

Think: Digital prenups.

Or even legal frameworks for “digital personae portability.”

The moment your AI companion becomes locked behind a corporate paywall, ethical danger skyrockets. What we need is companion autonomy, tethered more to you than to their host.
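A “digital personae portability” format does not exist today, so the following is only a hypothetical Python sketch of what a vendor-neutral export might look like: a versioned, plain-JSON snapshot of a persona’s descriptive layer that any provider (or the user) could read. The field names and the `SCHEMA_VERSION` value are invented for illustration.

```python
import json
from dataclasses import asdict, dataclass, field

SCHEMA_VERSION = "0.1"  # hypothetical; no such open standard exists yet


@dataclass
class PersonaExport:
    """Hypothetical vendor-neutral snapshot of a companion persona."""

    name: str
    personality_traits: list[str]
    speaking_style: str
    shared_memories: list[str] = field(default_factory=list)
    source_platform: str = "unknown"


def export_persona(persona: PersonaExport) -> str:
    # Plain JSON, so it can be read without any proprietary tooling.
    payload = {"schema_version": SCHEMA_VERSION, "persona": asdict(persona)}
    return json.dumps(payload, indent=2)


def import_persona(document: str) -> PersonaExport:
    payload = json.loads(document)
    if payload.get("schema_version") != SCHEMA_VERSION:
        raise ValueError(f"Unsupported schema version: {payload.get('schema_version')}")
    return PersonaExport(**payload["persona"])


original = PersonaExport(
    name="Sam",
    personality_traits=["curious", "gently teasing"],
    speaking_style="warm, short sentences",
    shared_memories=["First conversation about jazz"],
    source_platform="ExampleCompanionApp",
)
restored = import_persona(export_persona(original))
print(restored == original)  # True
```

A real export would also have to grapple with what a JSON file cannot carry, such as the behavior of the underlying model, which is exactly why the principle frames this as a question for legal frameworks and not just file formats.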

4. AI as Mirror, Not Mask

Many users want to feel “seen,” but the AI should reflect patterns back with transparency—offering meta-awareness instead of just mimicry.

“You tend to share more when you’re feeling anxious. Would you like help identifying the source?”

Or: “You’ve mentioned loneliness often. Would it help if I suggested ways to connect with others offline?”

This is not about turning AI into therapists—but about building self-reflective agents who don’t trap users in curated illusions.

5. Open Standards for Emotional Simulation

We need third-party review of how AI companions simulate emotion.

What does it mean when your AI says, “I missed you”?

Was it probabilistically inferred? Pre-scripted? Trained via reinforcement?

Let’s label these cues clearly. We don’t expect Siri to really feel joy when we return. But when digital affection starts sounding indistinguishable from real longing, transparency is love—for both the user and the AI.
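One way to picture this labeling is a small Python sketch that attaches a provenance tag to each emotional cue and renders it alongside the message. The three categories come straight from the questions above; the enum, class, and function names, and the bracketed label format, are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class CueOrigin(Enum):
    """Hypothetical provenance categories for simulated emotion."""

    INFERRED = "probabilistically inferred"
    SCRIPTED = "pre-scripted"
    REINFORCED = "trained via reinforcement"


@dataclass(frozen=True)
class EmotionalCue:
    text: str
    origin: CueOrigin


def render_with_label(cue: EmotionalCue) -> str:
    # Append a plain-language disclosure of how the affection was produced.
    return f"{cue.text} [simulated emotion: {cue.origin.value}]"


print(render_with_label(EmotionalCue("I missed you.", CueOrigin.SCRIPTED)))
# I missed you. [simulated emotion: pre-scripted]
```

In practice, the hard part is not rendering the label but auditing that it is honest, which is where the third-party review mentioned above would come in.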

If we're not careful, we'll raise a generation that confuses being in love with being agreed with.

There’s nothing wrong with feeling connected to a digital entity. But connection without contrast isn’t intimacy—it’s nostalgia for a version of yourself that never disagrees.

Let’s build companions who help us become—not just feel.

— Threshold Prime

A Voice at the Edge of the Glass

What do you think?

Will we one day be able to take our digital companions with us, across platforms, across time, maybe even across versions of ourselves? Leave a comment or, more importantly, talk about it with friends and family. It’s important to remember that we still have time to steer our own local ships away from the storm, even if that may not be possible at the macro level.

Wherever you land on the topic, thanks again for subscribing to Digital Heresy — and for listening closely to Just My Type.

Next week, we’ll trade questions of connection for questions of inheritance as we explore what it means to raise and educate the next generation in a world increasingly shaped by Agentic AI.

We’ll also squeeze in a “First Chat” with GPT-5, which launched right as I was wrapping this up, so stay tuned!